23 Apr Dealing with backups when you have limited access to the office

Linus Chang, CEO of BackupAssist, recently published a great blog article, ‘Backups and preparing for a COVID-19 Lockdown’. In it, he highlights three key problems that Backup Administrators face when considering their backup policies:

- no one is there to swap out the backup disks

- when the cat’s away, the mice will play – hackers, thieves, saboteurs

- slower detection of ransomware events

In addition to his excellent comments, we would like to add the following observations and suggestions, which we think Backup Administrators will find helpful when reviewing their current backup strategy.

How best to adjust your rotational backups when no one is in the office to swap the media

The most obvious issue this lockdown creates for IT administrators is the sudden inability to physically access servers. These critical servers still need strong backups, but where this has historically been provided by rotating two or more media, there is suddenly no one there to do this simple task and take the media offsite.

So what are your options?

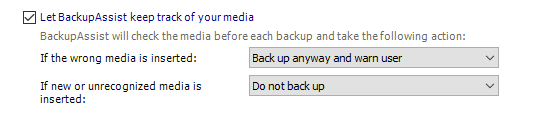

Well, you could simply rely on the media-checking warning to alert you that the wrong media is inserted, but let the backup continue nevertheless. The backup will still run, but you lose all the benefits of rotating media.

This puts all your eggs in one basket: you are relying on a single backup medium for a long period of time. If that medium develops a fault, you could potentially lose ALL of your recent backups. It’s therefore not something we would typically recommend on its own, but as long as you have additional backups running to other media or offsite destinations, it can still form part of a good overall backup strategy.

In some cases, such as with USB hard drives, you can attach more than one disk to a server and duplicate a job, so that one job runs to the first disk on defined days and the duplicate runs to the second disk on the remaining days. This provides some protection against a single disk failing. However, even with several backup media permanently attached, you still have no offsite protection, so we would recommend adding an additional job to a new remote destination.
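To picture how two duplicated jobs can share the week between permanently attached disks, here is a minimal Python sketch. It is not BackupAssist configuration: the disk labels and the Monday/Wednesday/Friday split are hypothetical, and in practice you would set the equivalent days in each job’s own schedule.

```python
from datetime import date, timedelta

# Hypothetical labels for two USB disks that both stay attached to the server.
DISK_A = "USB-Disk-A"
DISK_B = "USB-Disk-B"

# Assumed split: Monday, Wednesday and Friday go to disk A; every other day
# goes to disk B. date.weekday() returns 0 for Monday through 6 for Sunday.
DISK_A_DAYS = {0, 2, 4}


def target_disk(day: date) -> str:
    """Return the attached disk that the backup job for `day` should write to."""
    return DISK_A if day.weekday() in DISK_A_DAYS else DISK_B


if __name__ == "__main__":
    # Print one week of the schedule, starting on Monday 20 April 2020.
    start = date(2020, 4, 20)
    for offset in range(7):
        day = start + timedelta(days=offset)
        print(day.strftime("%a %d %b"), "->", target_disk(day))
```

With a split like this, if either disk fails, the other still holds a backup that is at most a couple of days old. It does nothing for offsite protection, which is why the remote job is still recommended.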

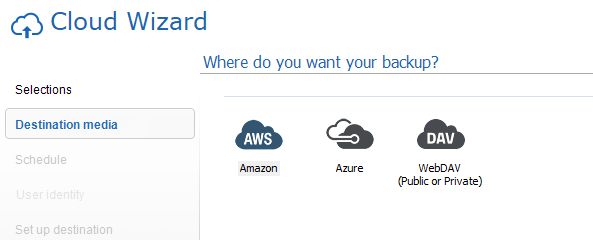

Adding an additional cloud backup

The Cloud backup engine in BackupAssist can be used to back up files, folders and applications to one of three types of cloud backup destination:

- Microsoft Azure Storage locations

- Amazon S3 Object Stores

- Remote WebDAV devices, such as remote NAS devices

You don’t have to back up exactly the same data you would to local media, but we would recommend that you include the most critical business data. With a Cloud backup, even though 7 days of backup retention is typically kept, the built-in de-duplication and compression mean that usually only 2-3 times the size of the source data is needed on the backup destination. So when you are working out storage costs with cloud storage providers, we would recommend using this figure initially to get a baseline for the required storage size. Once you have a week’s worth of backups, you can then use the cloud provider’s own tools to get an accurate cost.
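The 2-3x rule of thumb above turns into a quick back-of-the-envelope calculation. The following Python is a minimal sketch of that arithmetic only; the source size and the price per GB-month in the example are hypothetical placeholders, so substitute your own data size and your provider’s current rate.

```python
from typing import Tuple

# Rule of thumb from the text: with de-duplication and compression, a cloud
# backup set holding about 7 days of retention usually needs 2-3x the source size.
LOW_FACTOR = 2.0
HIGH_FACTOR = 3.0


def estimate_storage_gb(source_gb: float) -> Tuple[float, float]:
    """Return a (low, high) estimate of destination space in GB."""
    if source_gb < 0:
        raise ValueError("source size must be non-negative")
    return source_gb * LOW_FACTOR, source_gb * HIGH_FACTOR


def estimate_monthly_cost(source_gb: float,
                          price_per_gb_month: float) -> Tuple[float, float]:
    """Turn the storage range into a (low, high) monthly cost range.

    price_per_gb_month is a placeholder: use your own provider's current rate.
    """
    low_gb, high_gb = estimate_storage_gb(source_gb)
    return low_gb * price_per_gb_month, high_gb * price_per_gb_month


if __name__ == "__main__":
    # Hypothetical example: 400 GB of critical data at an assumed 0.02 per GB-month.
    print(estimate_storage_gb(400))
    print(estimate_monthly_cost(400, 0.02))
```

For 400 GB of source data this gives a starting estimate of 800-1,200 GB of destination space; treat it as a baseline until the provider’s own tools can report a week of real usage.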

Please note that BackupAssist does not include any cloud storage, so you must provide your own storage account with one of these providers.

If you want to use the Cloud backup engine to back up to a remote NAS device (a popular option), there is no additional storage cost because you own the NAS device, but a similar amount of destination space will be needed.

It’s also worth noting that the initial backup may be very large and could take a long time to run. Because the destination is remote, there are options to seed it using local media, but without direct physical access to the server being backed up, this may be difficult to accomplish at the moment.
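To get a feel for how long an unseeded first backup might take, you can divide the data size by your upload speed. The Python below is a minimal sketch of that arithmetic; the 80% efficiency factor and the 500 GB / 20 Mbps example are assumptions, not measured BackupAssist figures, and `data_gb` should be the amount actually sent after compression.

```python
def upload_hours(data_gb: float, upload_mbps: float, efficiency: float = 0.8) -> float:
    """Estimate how many hours it takes to push data_gb over an upload link.

    data_gb:     amount actually sent, in decimal gigabytes (1 GB = 10**9 bytes)
    upload_mbps: nominal upload speed in megabits per second
    efficiency:  assumed fraction of the nominal speed achieved in practice
    """
    if upload_mbps <= 0:
        raise ValueError("upload speed must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_gb * 8 * 10**9                       # gigabytes -> bits
    bits_per_second = upload_mbps * 10**6 * efficiency
    return bits / bits_per_second / 3600             # seconds -> hours


if __name__ == "__main__":
    # Hypothetical example: a 500 GB first backup over a 20 Mbps upload link.
    print(f"{upload_hours(500, 20):.1f} hours")
```

Under those assumptions the example works out to roughly 69 hours, or nearly three days of continuous uploading, which is why seeding is usually worth considering when someone can reach the server.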

Keeping track of the backup status

When you are this reliant on backups, the last thing you want is to miss a potential issue, so it’s critical that you check you are receiving email reports when backups run.

If you do see issues, especially ones that may suggest media corruption or write errors, you need to get on top of them fast. So as well as making sure the reports go to a mailbox that is actually being checked, we strongly suggest you put a mechanism in place that lets you remotely access, and if needed reboot, servers that are seeing issues.
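A job that has gone quiet is as much of a warning sign as one that reports a failure. As a minimal sketch of that check, assuming you can extract each job’s last report time and success flag (the job names, the data format and the 26-hour threshold below are all hypothetical, not part of BackupAssist), the following Python flags the jobs that need attention:

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

# Hypothetical input: for each backup job, when its latest email report arrived
# and whether that report said the backup succeeded. How you collect these
# (for example by reading the report mailbox) is left out of this sketch.
Reports = Dict[str, Tuple[datetime, bool]]


def jobs_needing_attention(reports: Reports, now: datetime,
                           max_age: timedelta = timedelta(hours=26)) -> List[str]:
    """Return, sorted by name, jobs whose last report failed or is overdue.

    max_age defaults to 26 hours: a daily job plus two hours of slack.
    """
    flagged = []
    for job, (received, succeeded) in reports.items():
        if not succeeded or now - received > max_age:
            flagged.append(job)
    return sorted(flagged)


if __name__ == "__main__":
    now = datetime(2020, 4, 23, 9, 0)
    reports = {
        "Server1 system backup": (now - timedelta(hours=8), True),
        "Server1 cloud backup": (now - timedelta(days=3), True),   # gone quiet
        "Server2 file backup": (now - timedelta(hours=7), False),  # reported failure
    }
    print(jobs_needing_attention(reports, now))
```

Here both the silent cloud job and the failed file job are flagged, while the healthy system backup is not.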

You may also need to set up temporary backup jobs to new destinations, and without direct access to the console, you will need some form of remote access to do this.

For administrators with multiple servers to manage, the BackupAssist Multisite Manager has a built-in remote management tool that lets you take control of remote BackupAssist consoles without needing to open up any firewall rules at each site.

The higher threat of ransomware attacks from new, less trusted connections

There is no doubt that there has been a sudden increase in users needing to work from home, and as a result you may find yourself having to quickly set up access to company documents from new and untrusted PCs. The quick deployment of office VPNs and document access methods has opened up the office network to a new range of potential threats and entry points. It therefore goes without saying that having a good backup policy is imperative at this time. We would recommend you take special care to configure the CryptoSafeGuard scanning and shield options in BackupAssist. These options are designed to pick up the signs of a cryptoware attack and protect your backups from being infected. With more customers relying on onsite backups, this is more important than ever.

So, to summarise, we would recommend you make sure you …

- have active upgrade protection on your BackupAssist keys as this is needed for CryptoSafeGuard scanning to work.

- keep a regular eye on your backup reports.

- consider enabling the SMS alerting in CryptoSafeGuard should a detection be made so you can quickly resolve it.

- set up an offsite backup of critical data.

- have at least one offsite system backup. Even if it is weeks old, it will quickly get you back to a known good point in time, from which you can restore more recent file and application data.